
Scaling AI Means Scaling Interconnects

This article is part two in a series on talks delivered at Accelerated Infrastructure for the AI Era, a one-day symposium held by Marvell in April 2024.

Interconnects have played a key role in enabling technology since the dawn of computing. During World War II, Alan Turing helped design the Bombe, an electromechanical machine that performed the mathematical computations needed to break Germany's Enigma code. Fast for its time, the Bombe relied on massively parallel operation and numerous interconnects. Eighty years later, interconnects play a similar role for AI, providing the foundation for massively parallel workloads. However, the growth of AI brings unique networking challenges, and Marvell is poised to meet the needs of this ever-growing market.

What’s driving interconnect growth?

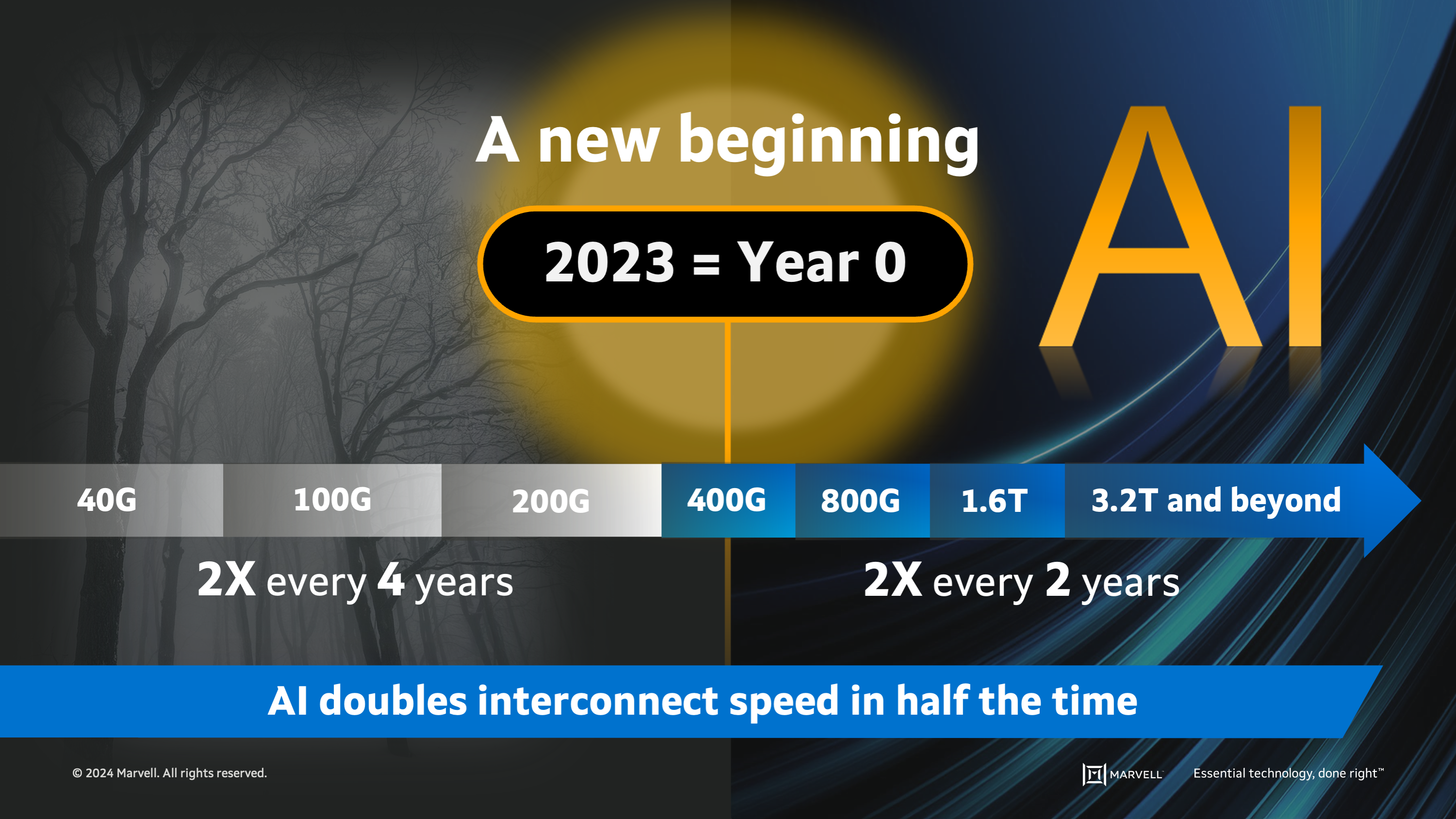

Before 2023, the interconnect world was a different place. Interconnect speeds tracked the cadence of cloud data center server upgrades: servers were upgraded roughly every four years, and interconnect speeds doubled on that same four-year cycle. In 2023, generative AI took the wheel, and AI demand is now driving interconnect speeds to double every two years. And while copper remains a viable technology for chip-to-chip and other short-reach connections, optical is the dominant medium for AI.
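The shift from a four-year to a two-year doubling period compounds quickly. The sketch below models interconnect speed as doubling once per fixed period, using the two periods described above. The smooth exponential curve is a simplifying assumption; real speed transitions arrive in discrete product generations.

```python
def speed_multiple(years: float, doubling_period_years: float) -> float:
    """Speed-up after `years`, assuming speed doubles every
    `doubling_period_years` (a smooth idealization of discrete generations)."""
    return 2 ** (years / doubling_period_years)


for years in (4, 8, 12):
    cloud = speed_multiple(years, 4)  # pre-2023 cadence: tied to server upgrades
    ai = speed_multiple(years, 2)     # AI-driven cadence
    print(f"after {years} years: {cloud:g}x (cloud cadence) vs {ai:g}x (AI cadence)")
```

Over eight years, the old cadence yields a 4x speed-up while the AI cadence yields 16x.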

“Optical is the only technology that can give you the bandwidth and reach needed to connect hundreds and thousands and tens of thousands of servers across the whole data center,” said Dr. Loi Nguyen, executive vice president and general manager of Cloud Optics at Marvell. “No other technology can do the job—except optical.”

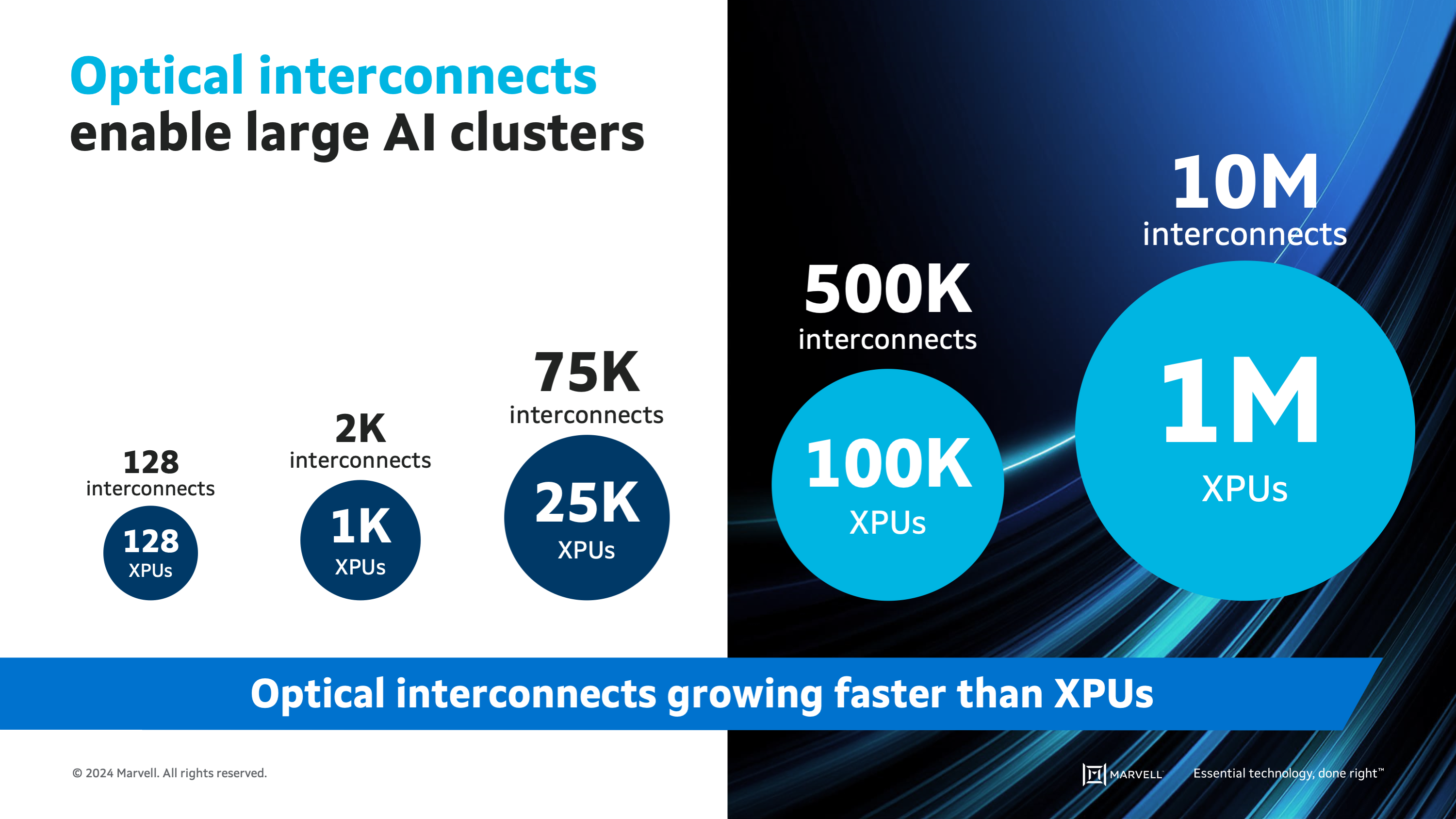

Along with getting faster and spanning greater distances, interconnects will dramatically increase in number. GPT-3 was trained on a cluster of approximately 1,000 accelerators that required 2,000 optical interconnects. GPT-4 was trained on a 25,000-accelerator cluster with about 75,000 optical interconnects.

“100K clusters will be available soon and that may require five layers of switching and 500,000 optical interconnects. People are talking about a million (accelerator) clusters, I can’t even imagine that, but those are the kind of numbers that people are talking about today and that may require millions of optical interconnects in a single AI cluster,” Nguyen added. “These numbers should only be used as a guide…(but) no matter how you look at it, the optical interconnects will grow faster than the accelerator growth in an AI cluster.”

Optical interconnects will grow faster than XPUs, at ratios of 5:1 or even 10:1.
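One simple way to see why the ratio climbs: in a non-blocking folded-Clos fabric, each switching layer adds roughly one link per accelerator. The sketch below applies that simplified model (our assumption, not Marvell's method) and treats every link as optical, as the article's counts imply. The five layers for the 100K cluster come from the quote above; the two and three layers for the GPT-3 and GPT-4 clusters are inferred from the article's link counts, not stated.

```python
def optical_links(accelerators: int, switching_layers: int) -> int:
    """Simplified model (assumption): in a non-blocking folded-Clos fabric,
    each switching layer contributes about one optical link per accelerator."""
    return accelerators * switching_layers


# (cluster, accelerators, switching layers). Layer counts for the first two
# rows are inferred from the article's link counts, not stated in it.
clusters = [
    ("GPT-3 era", 1_000, 2),
    ("GPT-4 era", 25_000, 3),
    ("100K cluster", 100_000, 5),
]

for name, accels, layers in clusters:
    links = optical_links(accels, layers)
    print(f"{name}: {links:,} optical links, {links // accels}:1 per accelerator")
```

Under this model the link-to-accelerator ratio equals the number of switching layers, so 2,000, 75,000, and 500,000 links fall out directly, and any cluster large enough to need more layers pushes the ratio higher still.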

And on top of all this comes sheer demand: hyperscale operators are rapidly expanding their footprint to provide the necessary training and inference capacity. Their existing data centers aren't optimized for these workloads. Concerns about data sovereignty and power consumption also mean that this wave of AI infrastructure will be dispersed across the globe, driving demand for more interconnects both inside and between data centers.

The network effect

To keep up with AI applications, new data centers will require accelerated infrastructure, and specifically accelerated connectivity in the form of interconnects.

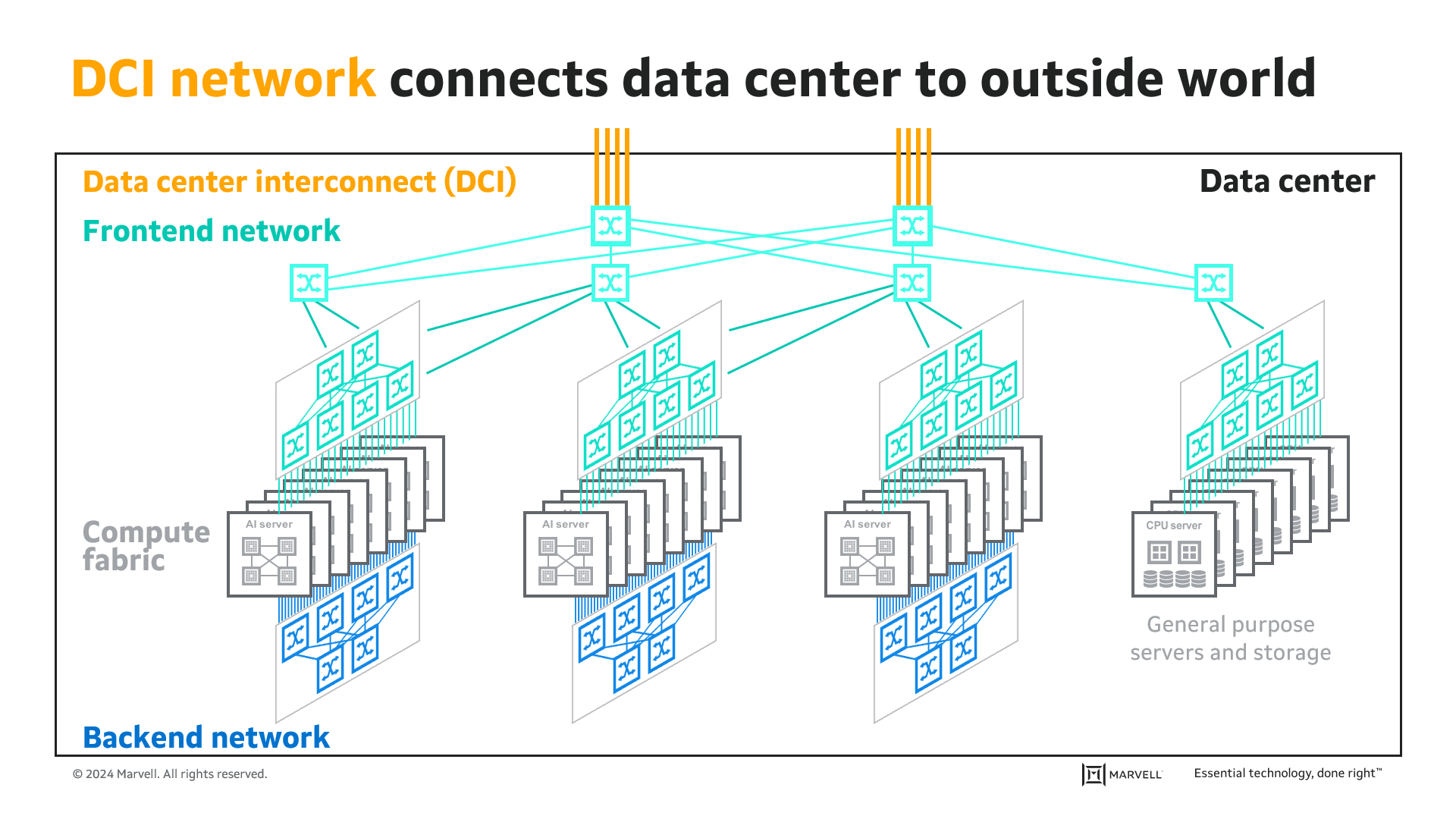

There are four networks needed for AI:

- The compute fabric connects AI accelerators, GPUs, CPUs, and other components inside servers. This fabric is designed to run at high speeds over short distances and generally relies on copper using PCIe or proprietary interfaces such as NVLink.

- The backend network uses layers of interconnected network switches and optical modules to connect the servers mentioned above into AI clusters. The networking protocol is either InfiniBand or Ethernet, and both run over optical modules. “Whenever you see InfiniBand or Ethernet, you should think about the back-end network running over optical,” Nguyen said.

- The frontend network connects AI clusters to the cloud data center for storage, switching, and more. The CPUs inside each AI server move data in and out, and each CPU has its own NIC connected to its own optical module. The frontend network always runs the Ethernet protocol over optical.

- Finally, the data center interconnect (DCI) network connects one data center to others in the region using links of 100 km or longer.
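The four networks can be summarized as a lookup keyed by where a link's two endpoints sit. The sketch below is illustrative only: the `Span` labels, the `Network` record, and `network_for` are hypothetical names, not an existing API. Marking DCI as optical is our assumption based on its 100 km+ reach, and its protocol is left unspecified because the article does not name one.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Span(Enum):
    """Hypothetical labels for where the two ends of a link sit."""
    INSIDE_SERVER = "inside a server"
    SERVER_TO_SERVER = "between servers in an AI cluster"
    CLUSTER_TO_DATA_CENTER = "between an AI cluster and the data center"
    DATA_CENTER_TO_DATA_CENTER = "between data centers (100 km or more)"


@dataclass(frozen=True)
class Network:
    name: str
    medium: str
    protocols: tuple[str, ...]


# The four AI networks as described in the article. The compute fabric is
# "generally" copper; DCI is assumed optical and its protocol is unspecified.
NETWORKS: dict[Span, Network] = {
    Span.INSIDE_SERVER: Network("compute fabric", "copper", ("PCIe", "NVLink")),
    Span.SERVER_TO_SERVER: Network("backend", "optical", ("InfiniBand", "Ethernet")),
    Span.CLUSTER_TO_DATA_CENTER: Network("frontend", "optical", ("Ethernet",)),
    Span.DATA_CENTER_TO_DATA_CENTER: Network("DCI", "optical", ()),
}


def network_for(span: Span) -> Network:
    """Return which of the four networks carries a link with this span."""
    return NETWORKS[span]


for span in Span:
    net = network_for(span)
    protocols = ", ".join(net.protocols) or "not specified"
    print(f"{span.value}: {net.name} ({net.medium}; {protocols})")
```

Keying on endpoint location rather than on protocol reflects the article's point that InfiniBand or Ethernet alone does not identify the network; Ethernet appears in both the backend and the frontend.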

AI servers connect over the backend network through two layers of switching using optical links, shown in blue. Frontend CPUs, shown in green, connect AI servers to the rest of the data center. DCI interconnects, shown in yellow, connect the data center to the outside world.
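Why do larger clusters need more switching layers? A common textbook model is the non-blocking folded-Clos (fat-tree) network built from identical switches of radix k, where L switching layers support at most 2 * (k/2)^L endpoints. The sketch below applies that formula; the 64-port radix is a hypothetical example, and real AI fabrics may use different topologies, radices, or oversubscription.

```python
def max_endpoints(radix: int, tiers: int) -> int:
    """Maximum endpoints in a non-blocking folded-Clos (fat-tree) network of
    identical `radix`-port switches with `tiers` switching layers. Every
    switch below the top tier splits its ports half down, half up; the top
    tier uses all of its ports facing down."""
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even number >= 2")
    if tiers < 1:
        raise ValueError("tiers must be >= 1")
    return 2 * (radix // 2) ** tiers


RADIX = 64  # hypothetical switch port count, for illustration only
for tiers in (1, 2, 3):
    print(f"{tiers} tier(s), radix {RADIX}: up to {max_endpoints(RADIX, tiers):,} endpoints")
```

With 64-port switches, two tiers top out at 2,048 endpoints, so clusters of tens of thousands of accelerators need additional switching layers, each of which adds another layer of links.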

The AI interconnect opportunity is massive, and Marvell solutions provide the building blocks data centers need to solve these networking challenges so AI can continue to scale.

To read the first blog in this series, go to The AI Opportunity.

# # #

Marvell and the M logo are trademarks of Marvell or its affiliates. Please visit www.marvell.com for a complete list of Marvell trademarks. Other names and brands may be claimed as the property of others.

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events, results or achievements. Actual events, results or achievements may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Tags: AI, AI infrastructure


Copyright © 2025 Marvell, All rights reserved.
