The AI Opportunity at Marvell

This article is part one in a series on talks delivered at Accelerated Infrastructure for the AI Era, a one-day symposium held by Marvell in April 2024.

Two trillion dollars. That’s the GDP of Italy. It’s the rough market capitalization of Amazon, of Alphabet and of Nvidia. And, according to analyst firm Dell’Oro, it’s the amount of AI infrastructure CAPEX that data center operators are expected to invest over the next five years. It’s a historically massive investment, which raises the question: Does the return on AI justify the cost?

The answer is a resounding yes.

AI is fundamentally changing the way we live and work. Beyond chatbots, search results, and process automation, companies are using AI to manage risk, engage customers, and speed time to market. New use cases are continuously emerging in manufacturing, healthcare, engineering, financial services, and more. We’re at the beginning of a generational inflection point that, according to McKinsey, has the potential to generate $4.4 trillion in annual economic value.

In that light, two trillion dollars makes sense. It will be financed through massive gains in productivity and efficiency.

Our view at Marvell is that the AI opportunity before us is on par with that of the internet, the PC, and cloud computing. “We’re as well positioned as any company in technology to take advantage of this,” said chairman and CEO Matt Murphy at the recent Marvell Accelerated Infrastructure for the AI Era investor event in April 2024.

Accelerated computing requires accelerated infrastructure

Data center AI investment is flowing, and is expected to keep flowing, to semiconductor companies, which are capturing the lion’s share of it. For Marvell, AI revenue in fiscal year 2024 (which began January 29, 2023, and ended February 3, 2024) was $550 million, nearly triple FY 2023 AI revenue, driven primarily by connectivity.

The reason: The accelerated computing that generative AI requires is impossible without underlying accelerated infrastructure. That accelerated infrastructure moves, stores, and processes the data required to keep these systems up and running. And accelerated infrastructure silicon is what Marvell does.

How AI is driving demand for accelerated connectivity

AI is the most data-hungry application the world has ever seen. Consequently, AI data center infrastructure requires accelerated compute, accelerated connectivity, and accelerated storage. Unlike most other applications, AI workloads such as training can’t easily fit on a single processor. AI requires interconnected processors (sometimes thousands or even tens of thousands of them), which means compute and connectivity are fundamentally linked.

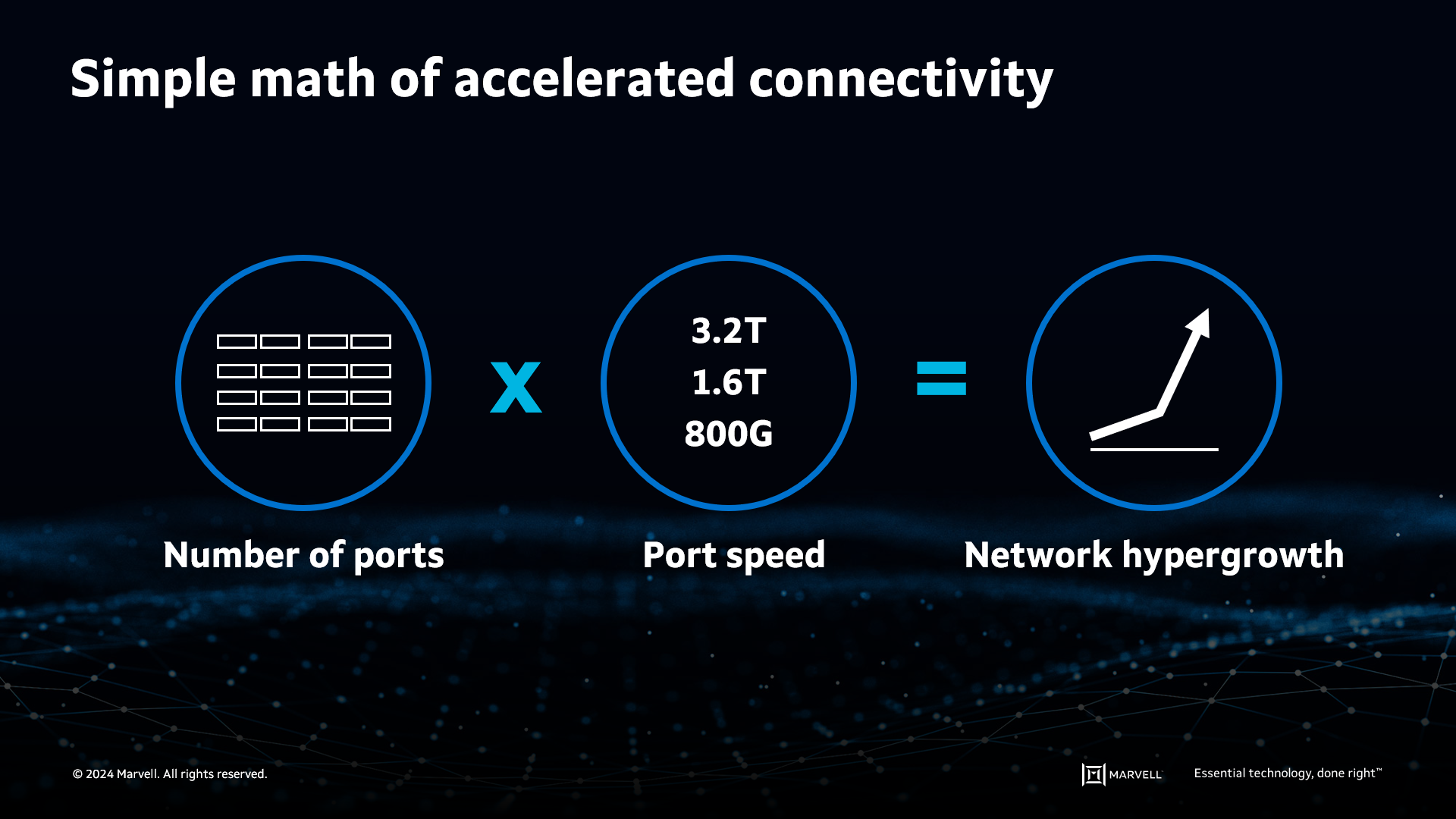

As data center computing power expands to meet AI demand, the effect on the network is easy to see. More accelerators mean more switches and NICs, with more ports to connect. Faster accelerators mean more bandwidth flowing across the network. Multiplied together, these two factors drive exponential growth in the accelerated connectivity infrastructure that makes up the AI network.

Accelerated connectivity is poised for exponential growth.
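To make the compounding concrete, here is a toy Python model built on purely hypothetical assumptions: a cluster of 1,000 accelerators at 400 Gbps each, with both the accelerator count and the per-accelerator bandwidth doubling every generation. These are illustrative numbers, not Marvell or industry figures. The sketch shows that when both factors grow geometrically, aggregate network bandwidth grows by the product of the two growth rates, here 4x per generation.

```python
# Toy model of the AI network scaling argument. All numbers are hypothetical,
# chosen only to illustrate how two growth factors compound.

def aggregate_bandwidth_gbps(accelerators: float, gbps_per_accelerator: float) -> float:
    """Total bandwidth (Gbps) the network must carry: device count x per-device bandwidth."""
    return accelerators * gbps_per_accelerator


def project(generations: int, start_accelerators: float, start_gbps: float,
            accelerator_growth: float, bandwidth_growth: float) -> list[float]:
    """Aggregate bandwidth for each generation when both factors grow geometrically."""
    totals = []
    accelerators, gbps = start_accelerators, start_gbps
    for _ in range(generations):
        totals.append(aggregate_bandwidth_gbps(accelerators, gbps))
        accelerators *= accelerator_growth  # more accelerators -> more ports, switches, NICs
        gbps *= bandwidth_growth            # faster accelerators -> more bandwidth per port
    return totals


if __name__ == "__main__":
    # Hypothetical cluster: 1,000 accelerators at 400 Gbps each, both doubling per generation.
    for gen, total in enumerate(project(4, 1_000, 400.0, 2.0, 2.0)):
        print(f"generation {gen}: {total / 1_000:,.0f} Tbps")
```

Under these assumptions the four generations carry 400, 1,600, 6,400, and 25,600 Tbps: neither factor alone grows faster than 2x, but their product quadruples each time.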

Partnering with customers for custom compute

In 2018, following the acquisition of Cavium, Marvell announced its intention to develop compute products for AI in the data center. The following year, we brought to market new products that met data center operators’ needs but did not reflect an emerging trend: each of these operators planned to make custom silicon its compute priority. To better address this need, we acquired Avera and invested further organically to expand our custom silicon strategy and platform.

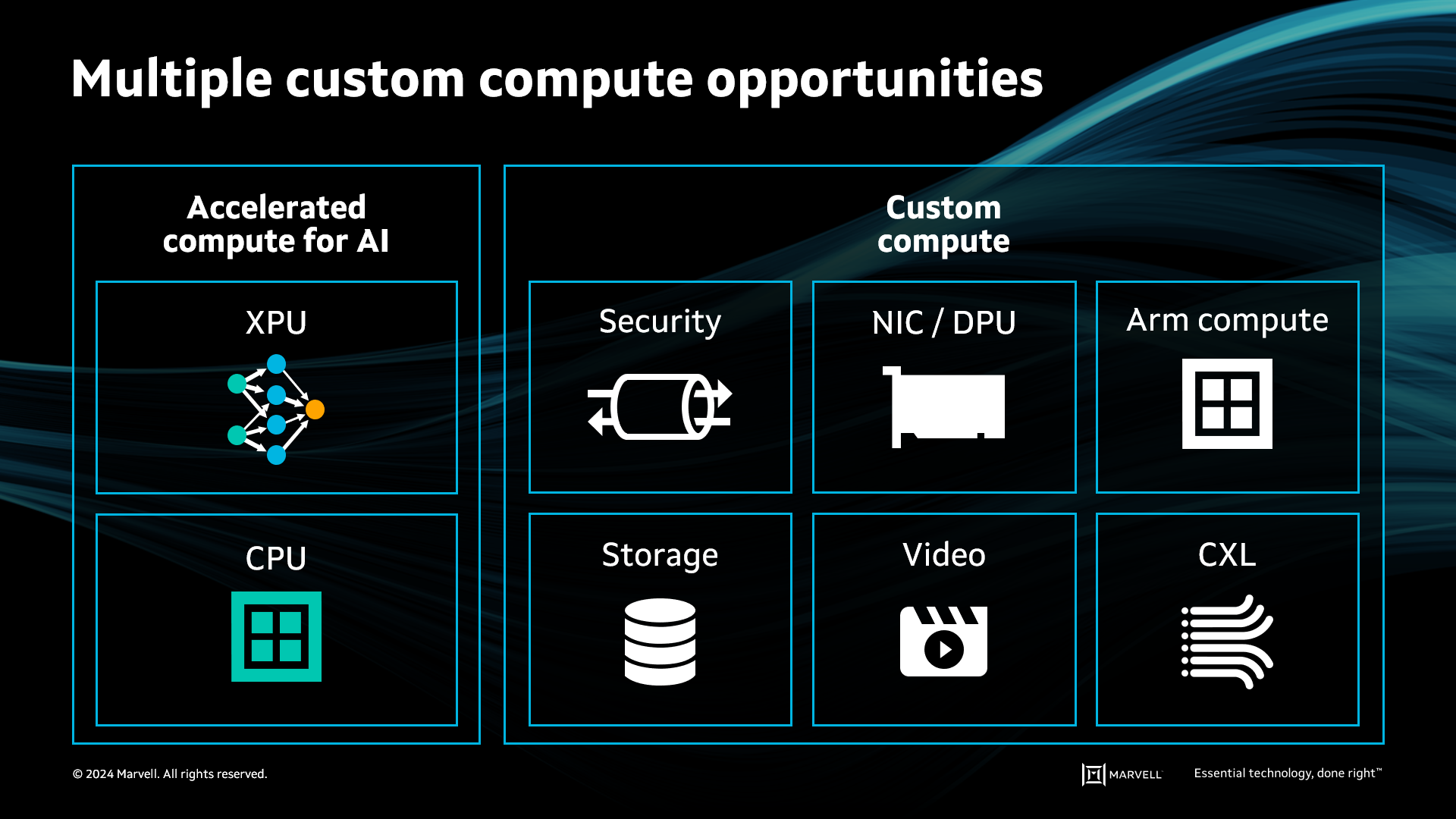

Given the broad opportunity for custom silicon within the AI compute category, we’re poised to benefit from these trends. Marvell is working with multiple customers on multiple applications that will span multiple generations. And we’ve recently secured a new design win with a third U.S.-based hyperscale operator. The design win funnel has grown eightfold since the inception of the Marvell custom business just five years ago.

Custom compute opportunities span numerous applications, companies, and generations.

Investing in AI for the long term

Last year, the total data center market for accelerated custom compute, connectivity (which encompasses switching and interconnect), and storage was $21 billion, and the Marvell share of that market was approximately 10%. Based on the views of multiple analysts, we estimate that the same market will reach $75 billion by 2028. While the market may end up larger or smaller, we’re confident in our ability to maintain our high-share position in storage and interconnect while growing our share in Ethernet switching and custom compute.
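The growth rate implied by those two market estimates can be worked out directly. The short Python sketch below computes the compound annual growth rate (CAGR), assuming that “last year” refers to calendar 2023 and therefore five years of compounding to 2028. The function name `cagr` is illustrative, and the result is simply arithmetic on the figures above, not an additional forecast.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value over `years`."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Figures from the text, in billions of US dollars. "Last year" is assumed
    # to be calendar 2023, giving five years of compounding to 2028.
    market_2023, market_2028 = 21.0, 75.0
    rate = cagr(market_2023, market_2028, 2028 - 2023)
    print(f"Implied CAGR: {rate:.1%}")
    # Sanity check: compounding the start value at that rate recovers the end value.
    print(f"Check: {market_2023 * (1 + rate) ** 5:.1f}B")
```

Run as written, this prints an implied CAGR of 29.0%, meaning the estimate assumes the market grows by a little under a third every year through 2028.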

Of course, doing so requires a continued commitment to investment. Marvell is building some of the most complex digital products in the world by investing in areas such as leading-edge process nodes, advanced packaging, and silicon photonics. Just as important, we’re working closely with our customers to co-architect their next-generation data centers.

“By having the strategic position on the custom compute side, we gain insight into the next-generation architecture requirements,” Murphy says. “Not just for custom compute but for all the connectivity, all the higher-layer switching, and our customers’ overall plans for their next-generation AI architectures.”

The remarkable progress we’ve seen in AI over the last 18 months would have been quite literally impossible just a few short years ago. And we are in the very early days of a new technology that will change how we live and work in ways that we can’t yet imagine. AI is unfolding inside the world’s data centers as we speak, and everywhere it is enabled by accelerated infrastructure.

At Marvell, we’re investing in our collective AI-driven future.

# # #

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events, results or achievements. Actual events, results or achievements may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Tags: AI, custom computing, AI infrastructure
